About

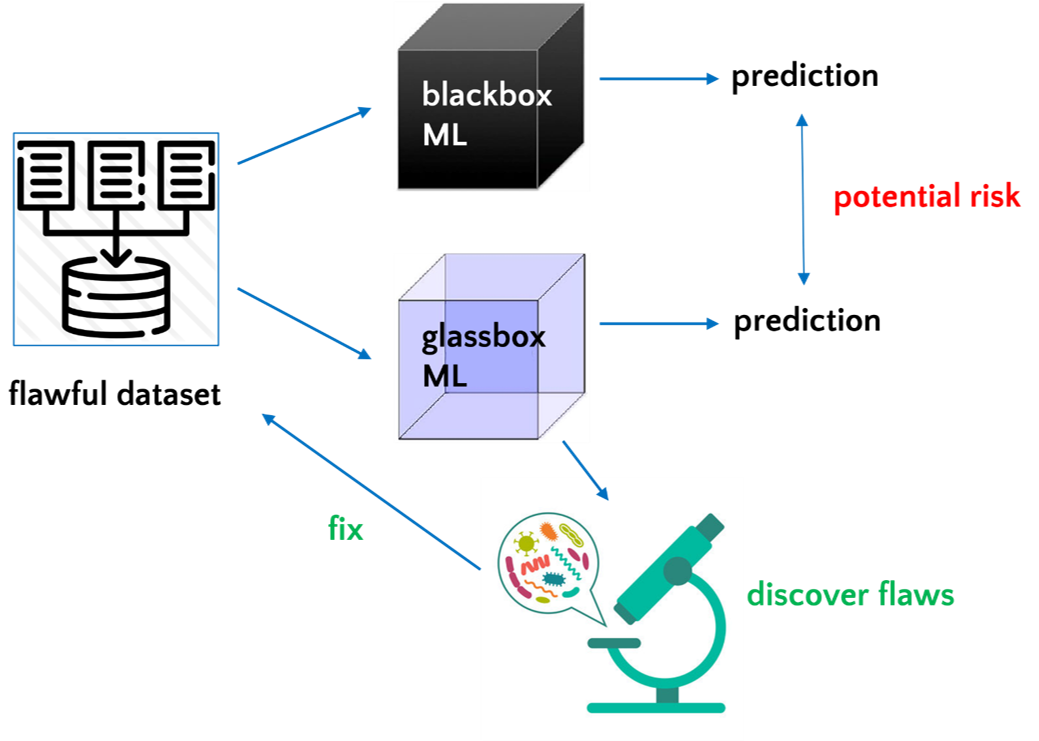

I am a researcher interested in the intersection of machine learning, optimization, and human-model interaction. My research focuses on building interpretable machine learning models that people can easily debug, interact with, and gain knowledge from.

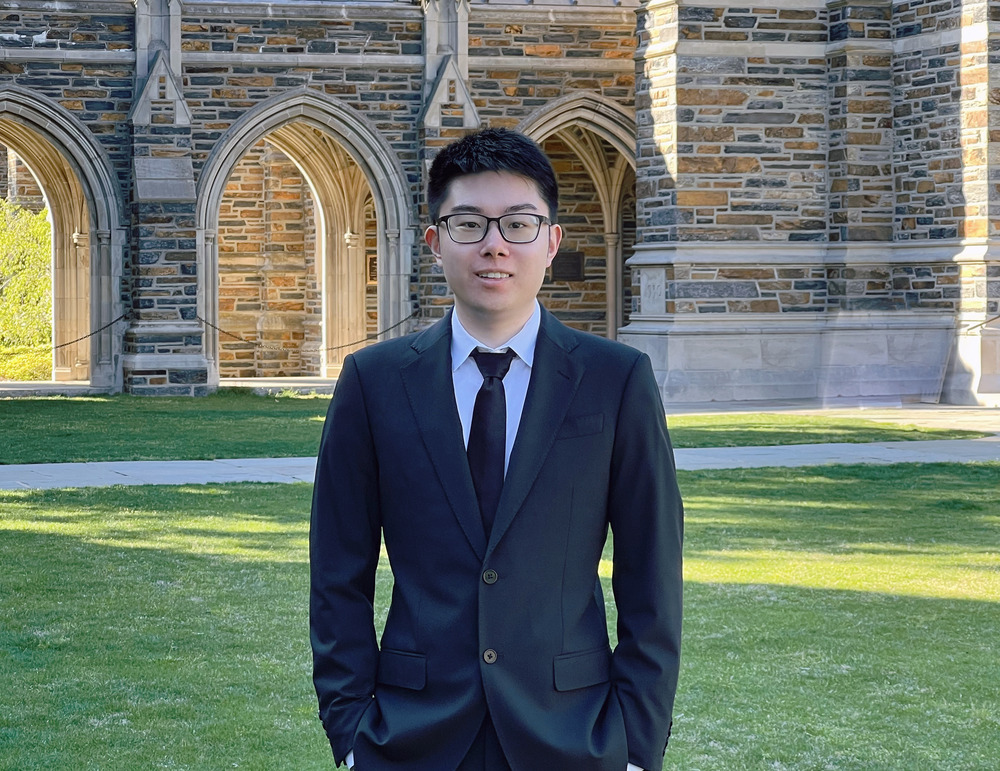

Currently, I work as a Quantitative Researcher at Citadel Securities, doing systematic equities alpha research. I obtained my Ph.D. in Computer Science from Duke University under the supervision of Prof. Cynthia Rudin. Before joining Duke, I received my B.S. in Computer Science from Kuang Yaming Honors School, Nanjing University, in 2018.